
San Francisco prosecutors will use artificial intelligence to reduce racial bias in the courts, making San Francisco the first city to conduct this type of experiment.

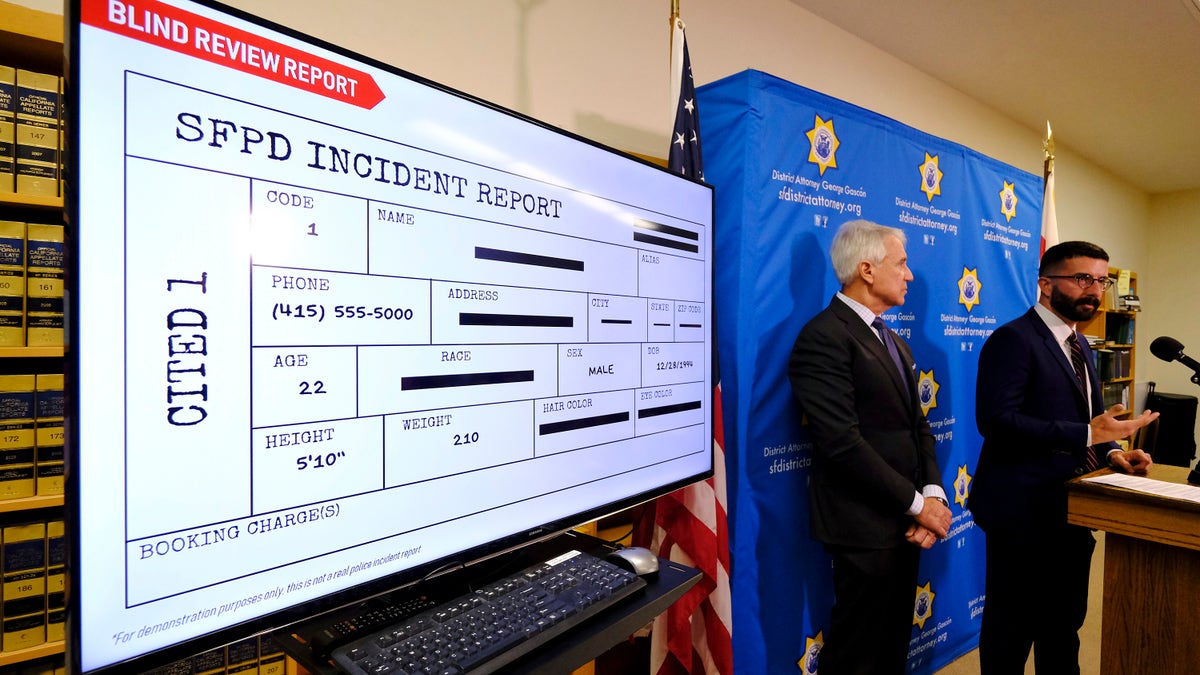

The "bias mitigation tool," which was developed by the Stanford Computational Policy Lab, scans police incident reports and automatically eliminates race information and other details that could identify someone's racial background as a way to prevent prosecutors from being influenced by implicit biases.

“Lady Justice is depicted wearing a blindfold to signify impartiality of the law, but it is blindingly clear that the criminal justice system remains biased when it comes to race,” said District Attorney George Gascón in a statement announcing the initiative on Wednesday. “This technology will reduce the threat that implicit bias poses to the purity of decisions which have serious ramifications for the accused, and that will help make our system of justice more fair and just.”


The tool, which was built at no cost to the city, works in two phases.

First, the technology redacts information including the names of officers, witnesses and suspects, as well as specific locations, police districts, and hair and eye color: essentially anything that could be used to suggest a person's race.

Acting San Francisco police chief Toney Chaplin is seen above. The city announced a new bias mitigation tool this week. (Getty Images)

After finishing the bias mitigation review, prosecutors will make a preliminary charging decision.

Then, during the second phase, they'll have access to the full, unredacted report and to any other information that isn't race-blind, such as police body camera footage.
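The article does not describe how the Stanford tool is built, so the following is only a hypothetical, rule-based sketch of the phase-one redaction step, assuming a report arrives as a list of named people plus a set of labeled fields and a free-text narrative. The names `IncidentReport`, `Person`, `redact` and the field labels are invented for illustration; the original report is left untouched so it can still be shown in phase two.

```python
import re
from dataclasses import dataclass

# Hypothetical field labels that could hint at race (per the article: locations,
# districts, hair and eye color). The real report schema is not public here.
SENSITIVE_FIELDS = {"location", "district", "hair_color", "eye_color"}


@dataclass
class Person:
    name: str
    role: str  # e.g. "officer", "witness", "suspect"


@dataclass
class IncidentReport:
    people: list[Person]
    fields: dict[str, str]
    narrative: str


def redact(report: IncidentReport) -> IncidentReport:
    """Phase one (sketch): return a race-blind copy; the original is not mutated."""
    narrative = report.narrative
    people = []
    counters: dict[str, int] = {}
    for person in report.people:
        # Number people per role so the narrative stays readable: [SUSPECT 1], ...
        counters[person.role] = counters.get(person.role, 0) + 1
        placeholder = f"[{person.role.upper()} {counters[person.role]}]"
        # Naive literal match of the full name anywhere in the narrative text.
        narrative = re.sub(re.escape(person.name), placeholder, narrative,
                           flags=re.IGNORECASE)
        people.append(Person(name=placeholder, role=person.role))
    # Blank out any labeled field that could suggest race.
    fields = {key: ("[REDACTED]" if key in SENSITIVE_FIELDS else value)
              for key, value in report.fields.items()}
    return IncidentReport(people=people, fields=fields, narrative=narrative)


if __name__ == "__main__":
    full = IncidentReport(
        people=[Person("Jane Doe", "suspect"), Person("John Roe", "officer")],
        fields={"offense": "burglary", "location": "Mission St.", "hair_color": "brown"},
        narrative="Officer John Roe detained Jane Doe near the store.",
    )
    blind = redact(full)
    print(blind.narrative)  # Officer [OFFICER 1] detained [SUSPECT 1] near the store.
    # Phase two would show `full`, which redact() left unchanged.
```

Exact name matching like this would miss nicknames, partial names or descriptive phrases, which is one reason a production tool would likely need something more sophisticated than a fixed list of rules.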

Sharad Goel, an assistant professor at Stanford University who is leading the lab’s effort to develop the bias mitigation tool, said in a statement: “The Stanford Computational Policy Lab is pleased that the District Attorney’s office is using the tool in its efforts to limit the potential for bias in charging decisions and to reduce unnecessary incarceration.”

Implicit biases are unconsciously held associations about groups of people that can lead someone to attribute certain traits to every member of a social group. Such biases, or stereotypes, can be based on a person's race or gender, for example.

The district attorney's office said it will also collect and review metrics on the volume and type of cases in which charging decisions changed between phase one and phase two, as a way to refine the tool and make sure it is working.
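The office has not said how it will compute these metrics. As a hypothetical illustration only, a tally of changed decisions by case type might look like the sketch below; `CaseOutcome`, `changed_decision_counts` and the decision labels such as `"charge"` and `"decline"` are invented names, not part of any published methodology.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseOutcome:
    case_type: str           # hypothetical category label, e.g. "burglary"
    phase_one_decision: str  # preliminary decision made on the race-blind report
    phase_two_decision: str  # decision after reviewing the full, unredacted report


def changed_decision_counts(outcomes: list[CaseOutcome]) -> dict[str, tuple[int, int]]:
    """Return {case_type: (cases whose decision changed, total cases)}."""
    totals = Counter(o.case_type for o in outcomes)
    changed = Counter(
        o.case_type for o in outcomes
        if o.phase_one_decision != o.phase_two_decision
    )
    # Counter returns 0 for case types with no changed decisions.
    return {case_type: (changed[case_type], totals[case_type]) for case_type in totals}


if __name__ == "__main__":
    outcomes = [
        CaseOutcome("burglary", "charge", "charge"),
        CaseOutcome("burglary", "decline", "charge"),
        CaseOutcome("assault", "charge", "decline"),
    ]
    print(changed_decision_counts(outcomes))
    # {'burglary': (1, 2), 'assault': (1, 1)}
```

Reporting raw counts alongside totals, rather than only percentages, keeps small case categories from looking more volatile than they are.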


With a blind police incident report displayed, San Francisco District Attorney George Gascon, left, and Alex Chohlas-Wood, Deputy Director, Stanford Computational Policy Lab, talk about the implementation of an artificial intelligence tool to remove potential for bias in charging decisions, on June 12, 2019, in San Francisco. (AP Photo/Eric Risberg)

"The criminal justice system has had a horrible impact on people of color in this country, especially African Americans, for generations," Gascón said in an interview ahead of the announcement, according to the Associated Press. "If all prosecutors took race out of the picture when making charging decisions, we would probably be in a much better place as a nation than we are today."

The tool will be fully implemented starting July 1, 2019.

Fox News reached out to the San Francisco Police Officers Association for comment on this new tool.

When contacted by Fox News, a spokesperson for the president of the National District Attorneys Association declined to comment.